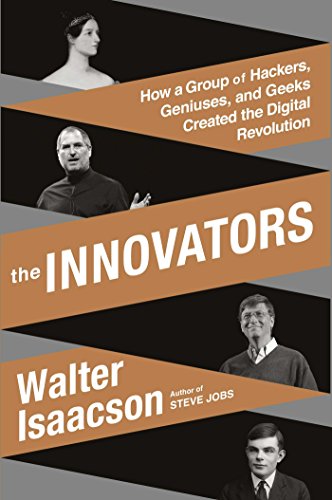

WALTER ISAACSON is America’s leading chronicler of Great Men—or “geniuses,” as his publisher describes them in a PR note accompanying his latest book, The Innovators. A former editor in chief of Time, which made its name by designating Great Men (and very occasionally Women) “People of the Year,” Isaacson specializes in expansive, solidly researched middlebrow histories, the kind that appear under your father’s Christmas tree or on a talk-show host’s desk. Benjamin Franklin, Albert Einstein, Henry Kissinger, and Steve Jobs have all received the Isaacson treatment, with a handful of others coming in for group portraits. The Innovators belongs to this latter category; it’s a story of what Isaacson calls “collaborative creativity” and the origins of the Internet and digital eras. There are dozens of characters here, beginning with Ada Lovelace and Charles Babbage in the mid-nineteenth century and ending with Messrs. Gates, Jobs, Wales, Brin, and Page on this side of the twenty-first.

As a vast survey of the inventions and personalities that eventually brought us networked computers in every home, purse, and pocket, The Innovators succeeds surprisingly well. Isaacson has pored over the archives and interviewed many of the principals (or at least their surviving colleagues and descendants). He also has an assured grasp of how these various technologies work, including the recondite analog proto-computers invented in midwestern basements and dusty university labs during the interwar period. Although many of these machines didn’t survive their creators, they informed subsequent, more celebrated inventions while also showing that, in this long period of tinkering and experimentation, cranks and hobbyists produced some important breakthroughs.

But Isaacson’s book is more than a history of how we came to live digitally, and that is its chief problem. By presenting itself as an expert’s guide to how innovation works—and by treating “innovation” as a discrete, quantifiable thing, rather than, say, a hazy, ineffable process that has been condensed into a meaningless corporate buzzword—the book founders on the shoals of its self-proclaimed mission. The ship need not be scuppered entirely; there’s much to be learned in these pages. And there is something worthwhile in this survey of the networked creation of ideas and inventions—for all the solitary, heroic connotations of the term genius, The Innovators is a chronicle of intensive group collaboration, as well as the often tangled evolution of technological change across the generations (and via more than a few patent disputes). Isaacson rightly points out that nearly all of the important inventions in the history of computing had many fathers, from the microprocessor to the Pentagon’s proto-Internet operation, ARPANET. These academics, engineers, mathematicians, Defense Department bureaucrats, and wild-eyed tinkerers came together in sometimes uneasy joint enterprises, but the process was undeniably, and deeply, collaborative—and not just in the cross-disciplinary petri dishes of Bell Labs and Xerox PARC, where so many important technological breakthroughs were devised. These collaborations continued as their guiding conceptual insights found popular (and profitable) application as objects of consumer delight—a revolution that took place at the behest of a new breed of knowledge geek, people like Jobs and Nolan Bushnell, founder of Atari.

In such detailed stories of sudden inspiration; of late nights spent bug-hunting in university labs; of a young Bill Gates running circles around IBM executives, who, unlike him, had no idea that software, not hardware, would dictate the future of computing—here is where “innovation” becomes something fascinating and specific, precisely because the word itself isn’t used. These are simply tales of human drama, contextualized within the arc of recent American industrial history. Yet as the book’s five hundred–plus pages unwind, Isaacson interrupts himself to present small bromides about what it means to innovate and what we might learn from these innovators, our presumed betters. “Innovation requires articulation,” he tells us, after explaining how the main strength of Grace Hopper, a trailblazing computer scientist for the US Navy, was her ability to speak in the languages of mathematicians, engineers, programmers, and soldiers alike. “One useful leadership talent is knowing when to push ahead against doubters and when to heed them,” he offers later.

The book is peppered with these kinds of passages, which often intrude on the narrative, depriving us of moments of real emotional power. Isaacson also tends to rush through some of The Innovators’ finer passages, material that could transcend rote technological history and show us something personal. He offers a believable sketch of the often cloistered life of Alan Turing, the brilliant British mathematician, cryptographer, and, ultimately, martyr to a bitterly conservative society. Having played an important role both in the development of computing and in Britain’s World War II code-breaking operations, Turing was arrested for “gross indecency”—that is, being a homosexual—in 1952. Quiet about but content with his identity, he pleaded guilty all the same, perhaps to avoid stirring up more trouble. After a trial, the court offered him an awful choice: a year in prison or injections of synthetic estrogen—essentially, chemical castration. He chose the latter. Two years later, like a twentieth-century Socrates, he committed suicide by biting into an apple he had laced with cyanide.

Isaacson’s recounting of Turing’s end is hardly longer than my own, and that is unfortunate. It is among the most disturbing episodes in postwar British history and deserves more explication and indignation. Though one hesitates to make it stand for something too large, perhaps this veritable homicide at the heart of the Anglo-American quest for technological mastery does represent something. After all, as Isaacson writes, “war mobilizes science,” and many of the great technological breakthroughs of the past century were subsidized, if not wholly steered, by the defense establishment. (To name one: The original microchips found homes in Minuteman ICBM guidance systems.) Isaacson gestures in this direction, making some references to the military-industrial complex and showing that while the men behind ARPANET—nearly all of them working for the Pentagon or under the sponsorship of Pentagon grants—thought they were building a distributed system for academic collaboration, senior Defense officials pushed the project because they thought it would provide a distributed command-and-control system that would survive a nuclear attack. Each side seems to have thought it was using the other.


The defense industry, then, becomes the subject of a submerged narrative in The Innovators, one that’s never fully brought into the light. Perhaps it’s uncomfortable to think that some of our most remarkable technologies not only owe themselves to the ever-churning American war machine but were in fact funded and designed on its behalf. Accepting this symbiosis—which some have, like Steve Blank, a tech-industry veteran and the author of a popular online lecture, “Hidden in Plain Sight: The Secret History of Silicon Valley”—would also mean tamping down the perpetual hosannas heaped on the digital trailblazers and asking some harder questions. For example, do they, and their successors, bear any responsibility for the current state of the Internet, in which a tool designed for liberating people from the strictures of physical media and geography has been corrupted into a mass-surveillance apparatus collecting incredible amounts of personal data for corporations and intelligence agencies alike? Is Alan Turing’s story an aberration, or perhaps a toll extracted by this devil’s bargain?

Probing these questions would mean chucking aside all the jabber about innovation. It would mean acknowledging that technological development is also a human story—one that involves politics, war, culture, discrimination, social upheaval, and a great deal of human exploitation thousands of miles down the production line, in Congo’s coltan mines and Shenzhen’s brutal factories. We hear little about these messier elements from Isaacson, except for an enjoyable tour through the Bay Area counterculture, in which Ken Kesey’s acid tests become a kind of analog trip through the doors of perception for these would-be digital psychonauts. I think that says something both about Isaacson’s worldview—moderate and apolitical in the thought-leader mode, as might be expected of the CEO of the prestigious Aspen Institute think tank—and about what has become the standard style of our big histories. They are supposed to record the past but not account for it. They tend toward the instructional: This innovation talk is really a thinly disguised form of self-help. Here is what makes a good research group. Here are the advantages of filing patents. Here are the types of people you need to start a company—a gifted, shy, freethinking engineer like Steve Wozniak and a sociopathic lapsed-hippie cutthroat like Steve Jobs. Sure, you can invent something and give it away, like free-software advocates Richard Stallman and Linus Torvalds. Or you can start calling people “shithead,” steal their ideas, and make a billion dollars—a précis that, I was sad to discover, describes not only Jobs but several other figures of industry lore.

Don’t heap all the blame on Isaacson, for he practices a rarefied form of a popular, desultory art. Its baser manifestations can be found in every look-to-the-horizon TED talk and, conveniently, in a new book by the journalist and media entrepreneur Steven Johnson. Johnson is the best-selling author of several other popular science works, and his latest, How We Got to Now, is a multimedia affair: A six-part miniseries will air on PBS this fall, and the Knight Foundation (the same outfit that gave a defrocked Jonah Lehrer $20,000 for a speech) has ponied up $250,000 for an “online innovation hub” connected to the project.

Here, in two hundred–odd pages stretched—through the magic of typography and archival photos—to three hundred, is the real stuff. Pure, uncut innovation-speak: Scratch the pages and take a hit.

How We Got to Now takes six innovations, broadly defined (Glass, Cold, Sound, Clean, Time, and Light), and explains how they influenced the world. To do this, Johnson calls on what he describes as the “hummingbird effect,” a more modest version of the butterfly effect, but still showcasing a taste for speculative, associational thinking. There are times when this methodology works. For instance, when Johnson writes that the invention of glass led to telescopes and microscopes, which in turn radically altered our view of the world and our place in it, one can nod in appreciation.

Borrowing a term from the biologist Stuart Kauffman, Johnson describes these progressions as examples of the “adjacent possible.” Certain technologies dilate our imaginations, showing us potential that we hadn’t envisioned. The printing press, he argues, played this role in the dissemination of eyeglasses and in how people saw their world. Because most people had never owned any books before, they didn’t realize that their vision was imperfect, much less that they had a reason, or the means, to correct it. This makes a kind of sense, all the more so when backed up with the choice historical anecdotes that Johnson digs up. But he tends to belabor this concept beyond its limits, particularly when, in the spirit of marveling at the wonders of technology—which seems to be the book’s raison d’être—he makes some far-fetched connections.

For instance, Johnson traces the rise of postmodern architecture to 1968, when the architects Robert Venturi and Denise Scott Brown visited Las Vegas and fell in love with neon light. But that light owed something to Tom Young, who decades earlier had started a neon-sign business in Utah and brought his buzzing bounty to Sin City. And Young owed his knowledge of neon to Georges Claude, who demonstrated neon light in Paris in 1910. So a schematic history of postmodern architecture, according to Johnson, goes something like this: Georges Claude → Tom Young → Las Vegas → postmodern architecture. It’s a history of innovation as a loosely connected chain of causality. Johnson offers a dubious sense of teleological progress, privileging the idea of innovation over what an innovation really meant. So, in this instance, postmodern architecture becomes more important for its place in this history-as-a-series-of-inventions than for the avant-garde buildings and social change it produced.

These flights of associative fancy are one of the book’s most frustrating and, alas, most persistent features. Johnson says that they “might seem like yet another game of Six Degrees of Kevin Bacon,” and I can’t help but agree. Why, I wonder, isn’t Johnson content with making us appreciate these inventions and the peculiar characters behind them? Why does he push us, like a priest escorting a truant to the altar, toward an unearned sense of awe?

An answer might be found in the book’s introduction. Johnson briefly talks about the “externalities and unintended consequences” of technological development. He presents some incisive questions, ones that might be germane to his book: “Cars moved us more efficiently through space than did horses, but were they worth the cost to the environment or the walkable city? Air-conditioning allowed us to live in deserts, but at what cost to our water supplies?” These aren’t frivolous concerns. They are, in fact, at the core of what it means to be a serious, worthwhile thinker about technology and its place in our world—a world that, as his mention of the environment indicates, doesn’t fully belong to us. But Johnson is content to brush off his own provocation: “This book is resolutely agnostic on these questions of value,” he says. “Figuring out whether we think the change is better for us in the long run is not the same as figuring out how the change came about in the first place.”

Sure, but can we not have both? Why do these people equate being apolitical with enlightenment? Why, when we enter the hushed cathedrals of techno-wisdom, must we check our values, concerns, and humanity at the door?

After all, Johnson is not content simply to revel in the well-varnished glories of the past. His sprightly account of Frederic Tudor’s adventures in the ice business (a series of spectacular failures followed by even more spectacular success) leads to the advent of refrigeration, which allowed for the creation of industrial farms, which brought us frozen food, improving the diets of people who didn’t have prior access to fresh fruits and vegetables. These innovations in cold technology additionally brought us air-conditioning, which has improved the comfort and health of millions of people. It has also, as Johnson mentions, allowed for the growth of enormous cities in tropical climates. Or, as he puts it—far too grandly and without evidentiary support—“what we are seeing now is arguably the largest mass migration in human history, and the first to be triggered by a home appliance.”

Johnson doesn’t consider that the very features he celebrates—industrial farming, the boom in air-conditioning in the developing world, the growth of megacities powered by fossil-fuel plants—are helping to push our planet toward environmental collapse. If you were driving toward a cliff, would you still gaze at the beautiful mountains in your rearview mirror? Or would you perhaps wonder why you were still driving?

Another bizarre but characteristic moment emerges in Johnson’s tour through the history of radio. Lee De Forest, a pioneer of the medium, listened aghast as jazz swept the airwaves of late-’20s America. He wrote a letter to the National Association of Broadcasters, speaking of radio as if he had birthed it himself: “You have debased this child, dressed him in rags of ragtime, tatters of jive and boogie-woogie.” The letter is, of course, racist. But that’s not how Johnson sees it. His own response is brilliantly technocratic. “In fact,” he writes, “the technology that De Forest had helped invent was intrinsically better suited to jazz than it was to classical performances. Jazz punched through the compressed, tinny sound of early AM radio speakers.” By way of technical fact, this may be true, and it certainly matters. But it also matters that an early, influential figure in radio was an unabashed racist and that, in a dose of deserved irony, his own invention helped promulgate a culture he despised. A fuller accounting of this history would acknowledge that. The closest Johnson comes to this is by saying that “jazz stars gave white America an example of African-Americans becoming famous and wealthy and admired for their skills as entertainers rather than advocates.” There is a lot of nonsense packed into these remarks, but what puzzled me most was the tossed-off use of “advocates,” with the attendant implication that advocacy is somehow unseemly.

I’ve decided that the champions of innovation-speak are as confused by the subject as anyone. To them, technology is a thing with a life of its own. And it can evidently only be understood via the ministrations of a class of reverent spiritual adepts, duly catechized in treating its essence as holy and its creators as demigods. And so their tales are ultimately as simple, as explicit in their lessons, as a sacred text. Innovation is the irruption of God in the machine, but its supplicants can only describe it in platitudes designed to read like koans.

These writers have been captured, in other words, by their own techno-determinism, which has blinded them to any other path. That is why Johnson stitches together his elaborate chains of association into little more than reassuring pieties of accidental or unintended progress. It’s also why, when Isaacson says that Lee Felsenstein, an important figure in the development of the PC, saw the utopian promise of print media “turn into a centralized structure that sold spectacle,” the author fails to shriek in recognition. For isn’t that exactly what’s happened to the Internet? Does that not perfectly express today’s digital media, with its dependence on big telecoms, advertising, and virality? But to recognize these truths would require pulling back the silicon curtain and realizing that, along with the magic, there exists here as much ugliness as in any other realm of human experience.

Jacob Silverman’s book, Terms of Service: Social Media, Surveillance, and the Price of Constant Connection, will be published next year by HarperCollins.