At the beginning of December, music-streaming behemoth Spotify laid off 1,500 employees. It was its third set of axings last year in response to the tightening of capital markets, altogether adding up to more than a quarter of its global staff. But this round was different, because it included Glenn McDonald.

McDonald, whom I first became aware of when he was a music blogger in the late 2000s, joined Spotify in 2014 as part of its acquisition of the MIT-offshoot, music-research company Echo Nest. He became the streaming giant’s “data alchemist,” which meant he conducted experiments to transmute all the information about musical styles and listening patterns that Spotify accumulates through its operations into usable tools and insights for musicophiles. His flagship creation was a site called Every Noise at Once, a dizzyingly vast, word-cloud-style array of global genres, subgenres, and microgenres, mapping how they intersect—the path, for instance, from “new italo disco” to “deep hardtechno” to “acidcore” to “electronica Peruana” to “workout product”—and allowing users to hear samples from thousands of such niches. His programming also generated New Particles, the most comprehensive weekly new-release list anywhere, compiling hundreds of new songs and albums by genre every Friday, a tally that I know from experience is nearly impossible for any human to keep up with. His data chart Every Place at Once tracked the music currently most popular in cities around the world, from Berlin to Mumbai to Fargo. These tools and others like them still exist on McDonald’s website, furia.com, but since December 4, they’re no longer being fed and updated from Spotify’s databases. Unless another suitable backer comes along soon, they will go inert.

It’s a satisfying conspiracy theory that someone in power at Spotify actively wanted to squash these experiments, perhaps because their expansiveness put the company’s regular products to shame. More likely he was felled by the blunt urge to cut costs in a company that as yet doesn’t turn a consistent profit despite its dominant market share. Still, that blinkeredness is telling. Spotify is constantly hit with bad publicity about the low royalties it pays musicians. Critics also charge that its automated algorithms foster passive background listening that devalues music as a whole. The company could have tried to counter in part by promoting McDonald’s demonstrations of the myriad ways its systems can be mind-blowing partners for sonic and even sociological adventurers. (He also did a lot of less publicly visible in-house work.) But no, better for the stock price to eliminate one more midrange salary.

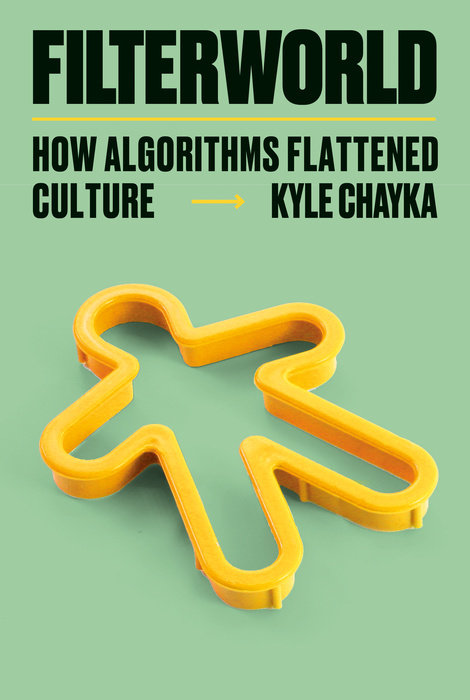

In some ways, as goes Spotify, so go we all. Barely a decade after the long-dreamed-of “universal jukebox” became a commonplace reality via readily accessible streaming services, with their parallels in film, TV, and other media, people already tend to take these wonders for granted. We might use them mainly like glorified iPods, for easy access to already familiar everyday soundtracks (see “workout product” above). Or we might dismiss them out of hand the way would-be intellectuals used to boast about not owning a TV. But that’s par for the historical course of technological upheaval. In New Yorker staff writer Kyle Chayka’s new book, Filterworld: How Algorithms Flattened Culture, he cites Proust on the telephone: In Search of Lost Time’s narrator flips from awe to irritation with that miracle device within a blink of its widespread adoption just before the turn of the twentieth century. “Habits require so short a time to divest of their mystery the sacred forces with which we are in contact,” he says, “that, not having had my call at once, my immediate thought was that it was all very long and very inconvenient, and I almost decided to lodge a complaint.”

Likewise, as quickly as we’ve become accustomed to streaming and social media, Chayka writes, we’ve become incurious about their workings, particularly the black-box algorithms that dictate what content they serve us. But in a more obvious parallel, with Filterworld, Chayka is definitely lodging his own complaint. Not that he shouldn’t. There is a lot to be unhappy about. But too often here it’s expressed in the tones of a former enthusiast who’s suddenly encountered his first glitch. Chayka frequently yearns to rewind to a tech phase he was more comfortable with, whether of AOL message boards or of blogging and MP3 downloads (he actually writes, “I have hope for an Internet that’s more like Geocities,” some two hundred pages in). I get the impulse to recover a more democratic online world compared to the top-down dictates of Silicon Valley. But in seeking a more humane tech future, nostalgia is more hindrance than help; the alternatives will need to be legible to new generations weaned on social-media feeds.

Chayka does provide a robust survey of many of the essential issues, in six brisk chapters that strike a readable balance between cultural theory, feature-style reporting, and hot (sometimes overheated) takes. The cultural domination of a handful of streaming and social platforms has transformed the landscape rapidly and radically. Artists and audiences alike are still struggling to assess the implications. Distressingly, this transition has taken place under a regime of near-monopoly capitalism resembling a globalized Gilded Age. The new cultural robber barons—Google (which also owns YouTube), Apple, Amazon, Facebook (including Instagram), Twitter (now Musk-ified to X), Netflix, Spotify, etc.—originally made their fortunes offering visionary services that allowed users to build their online worlds with a degree of creative autonomy. Once they had people hooked, they began to turn the screws. It’s astonishing to recall that Google’s founders swore early on never to incorporate advertising, because it would inevitably bias and corrupt search results. Before long, of course, Google went ahead and inhaled a giant portion of the advertising market, decimating the ad-funded media that had been there before it, and subjecting all of our interests to its own financial screening.

The focus of Chayka’s critique is the way that, over the course of the 2010s, most platforms transferred primary agency from users to the companies’ internal algorithmic engines. These purportedly deliver personalized recommendations, but above all they direct your attention where the companies and their advertisers want it to go, whether to their own material or simply to whatever kind of content keeps you glued to the stream. (The latest major entrant, TikTok, skipped the first stage and from the jump aimed purely at being the most addictively algorithmic binge machine.) By using their financial power to swallow up any competitors with new ideas, as well as technical dodges and political influence to overcome attempts at oversight, these tech feudalists keep us grazing and clicking within their enclosed estates, even as they yield less and less benefit to clients and more and more to the company.

Since late 2022, the science-fiction writer and brilliant tech-culture analyst Cory Doctorow has popularized a term for that pattern of platform decay—“enshittification.” It perfectly captures the way that using Facebook on your phone feels ever more like searching for a postcard from a friend in an avalanche of junk mail. But it also evokes the intensifying pain of authors dealing with Amazon or musicians with Spotify, as early dreams of reaching wider audiences level out and the rentiers demand greater shares of artists’ meager returns.

Chayka’s attempt at a similar coinage is “flattening.” He argues that in “Filterworld,” as he calls the aggregate space of algorithm-driven platforms (thankfully not quite so often as to drive the reader nuts), what gets pushed to most users becomes blander and blander. The various feedback loops that inform recommendations swerve away from anything that might offend, irritate, or even challenge a user enough to make them click “skip.” Or, worst of all, to close the app. In turn, culture makers become desperate to give the algorithms what they want. That might be lo-fi ambient beats to study by or a vaguely pleasant TV series such as Netflix’s Emily in Paris, a hybrid travel/fashion show and influencer bildungsroman, with just enough plot to reward viewers’ half-attention. On a more intimate level, many people have gone through phases of trying to mimic “Instagram face” in photos (or in extreme cases, to achieve it via cosmetic surgery) or to dub “TikTok voice” over their videos. Chayka quotes a joke Google engineer Chet Haase once made on Twitter: “A machine-learning algorithm walks into a bar. The bartender asks, ‘What’ll you have?’ The algorithm says, ‘What’s everyone else having?’” The algorithms’ processes, Chayka asserts, will always make a dive for the lowest common denominator. (Unless, I wonder, they are given other priorities?)

And with that, Chayka leaps to this conclusion:

Even in the short time of their rise, algorithmic recommendations have warped everything from visual art to product design, songwriting, choreography, urbanism, food, and fashion. . . . The twin pressures for creators to inspire engagement and avoid alienation have meant that so many cultural forms have become both more immediately enticing and more evanescent, leaving behind nothing but an atmosphere.

Amazingly, Chayka makes these sweeping statements two paragraphs after discussing Walter Benjamin’s writing on photography in order “to point out that the force of technology shaping culture has been happening forever, and that force is neutral, not inherently negative.” Chayka concedes that “it takes decades, if not centuries, to determine just how a technology has influenced cultural forms. . . . [Only] when a new tool has become unremarkable can its effects be judged.”

Except in this case, apparently, because Chayka then postures that he actually can judge the effects of what’s happening in “Filterworld,” which absolutely is “flattening,” in case you’ve forgotten, and it’s definitely a bad, possibly a cataclysmic, thing. This is what it is like to read Filterworld. Every time this well-informed critic and thinker threatens to acknowledge the limits of his thesis, he suddenly pivots away as if someone just tapped him on the shoulder and reminded him that his job is to sell books. As much as I share his concerns, this book repeatedly made me want to yell back at him for willfully underplaying obvious exceptions and counterarguments.

Chief among these is to what degree Chayka’s “flattening” is anything new. When he writes, “If anything, mass culture lately appears more aesthetically homogenous than ever,” he seems to forget the widespread critiques of mass conformity in the 1950s, for instance. Name almost any significant countercultural movement, from Pre-Raphaelite bohemians to beatniks, hippies, and Thatcher-era punks, and you’ll find a reaction against homogeneity and repression. Not to mention any truly authoritarian or religious society, where the strictures are far more stultifying. In these contexts, it’s hard to get so worked up by Chayka’s recurring bogeyman of the international spread of the Instagram-friendly “Generic Coffee Shop,” with its chillingly blond wood grains and oppressively hipsterish retro industrial lights. Not that global gentrification and cultural displacement aren’t serious issues. But to raise the stakes of his case, Chayka keeps postulating simplistic models of how cultural tastes were disseminated prior to the algorithms.

In chapter 2, “The Disruption of Personal Taste,” he visits a bookstore set up by Amazon in Georgetown in DC during the company’s brief flirtation with brick-and-mortar outlets (I’m unsure if they were in earnest or just a publicity stunt). Chayka understandably contrasts the Amazon store, laid out to display the online service’s bestsellers and most-recommended volumes, with any independent bookstore thoughtfully curated by human owners and staff. But he completely ignores what dominated the retail realm before Amazon, massive chains such as Borders and Barnes & Noble, which were wiping out indies right and left. Chayka says of the Amazon store, “I couldn’t see myself as a buyer . . . because there was no coherent idea of an imagined shopper.” But did Borders have any such coherent concept? He’s idealizing the non-digital consumer/business relationship as if, for instance, Amazon invented the practice of pushing bestsellers, and ignoring all the less-than-liberatory ways that cultural objects were already being pushed on consumers.

In fact, Chayka treats the concept of “taste” throughout with a preciousness I doubt he actually is unsophisticated enough to hold. He cites contradictory definitions, mobilizing Voltaire one moment to say that taste must be cultivated and effortful, and then Montesquieu and Agamben the next to say that taste works by rules we do not (cannot?) know, and that the aesthetic sense “enjoys beauty, without being able to explain it.” He quotes seventeenth-century Japanese artist Kobori Enshu denigrating his own taste compared to a greater artist’s, saying, “I unconsciously cater to the taste of the majority.” Chayka wants to point out that algorithms do the same thing, but he skips past the fact that Enshu found himself falling into cultural lockstep four hundred years ago, without benefit of phone or laptop. The way that taste is influenced by social pressures and fashions is pre-coded into human tribalisms and insecurities. More optimistically, it comes from being social animals who want to connect and share our enthusiasms and aversions with others. Academic studies have shown for decades that people will gravitate toward arbitrary cultural objects they’ve never seen before, if they’ve been told one is more popular than the other. And marketers, publicists, TV and radio programmers, and the like have always used that dynamic to manipulate audiences, pushing testimonials and box-office numbers. Instagram might be the immediate cause of why photo-op-seeking travelers have inundated Iceland recently. But tourists have always flocked to the places recommended in the guidebooks.

Chayka admits that “it can be difficult to distinguish the organic social code” of taste—composed of “personal preferences, preconceptions absorbed from marketing, and social symbolism”—from what recommendation algorithms do. Yet he insists that “it is vital to do so.” I wish he had persuaded me why. Instead, I think it’s vital to be conscious and critical of whatever systems are imposing tastes on people in any given society, however they’re delivered. Yes, algorithms and AI have been shown to carry forward the destructive prejudices (sexist, racist, and so on) of the societies that supply their data. But if we know that, mightn’t it be possible to revise their codes to counteract those effects, more easily than among the population, given the political will? Likewise, if algorithms are failing to place enough emphasis on complexity, depth, and surprise in their cultural offerings, as opposed to familiarity and superficial pleasures, it might be more a people problem than a tech issue. Artists and aesthetes have been complaining about the same cultural problem for decades, maybe centuries. I stand alongside Chayka in looking for strategies to push in the other direction. But I can’t help rolling my eyes when he talks as if it’s any kind of novelty for artists (much less “influencers”) to find their visions stymied by commercial demand. Again, one advantage of the algorithmic version may be that it’s externalized in ways that become easier to spot and critique.

Chayka keeps harping on the flattening and mass normalization of content, but less frequently admits that for years it’s the exact opposite that’s alarmed many people about the algorithms. They are worried about people being siloed demographically and served content that stokes outrage and division. The insurrectionists outside the Capitol that day in January 2021 didn’t get on buses because they’d been lulled into a stupor by too much soothing blandness. Cute animal cartoons and hateful conspiracy documentaries are all the same to the algorithms, as long as you keep watching. Filterworld includes a couple of nods in this direction, like the case of a teenager whose suicidal ideations were fatally reinforced by depression-encouraging social-media feeds. But it never really addresses the very real paradoxes of how algorithms can both flatten contradictions and heighten them.

That adolescent case leads off Chayka’s chapter on the need and potential for “Regulating Filterworld.” Such measures might include forcing the platforms to make their workings more transparent, cutting off self-dealing (as in pushing sponsored products in searches and recommendations), stopping anti-competitive practices, and breaking up tech monopolies. Culturally, it could even include, as Daphne Keller of Stanford’s Cyber Policy Center argues, “forcing platforms to put some veggies in with the all-candy diet that users are asking for.” The European Union has done more than most other legislatures in this direction, but even there, as Chayka writes, arguably most of its reforms still ask more initiative from users (to opt out of surveillance-by-cookie, for instance) than from the companies. In Canada, when the federal government recently attempted to make companies like Google and Facebook compensate the national news media for co-opting their content, Facebook retaliated by blocking links on its platforms not only to Canadian news sources, but to all news. It got some surprisingly zealous support from users and academics who still subscribe to an “information must be free” ideology left over from an online idealism that was really lost long ago. These won’t be easy fights.

The stylistic mode of Filterworld, however, seems to require Chayka to pull away quickly from such sociopolitical, structural approaches and back to a more personal perspective. Here, his prescriptions are much less satisfying. When he looks to the slow-food movement as some kind of model for healthier cultural consumption, I simply have to giggle. Of course, we should all try to be more reflective and deliberate about what culture we take in. Going on a temporary “algorithm cleanse,” as Chayka did, sounds like a fine way to reset. But last I checked, the slow-food folks had made next to no progress in dismantling the destructive force of the industrial food system. Likewise, a couple of times Chayka falls back on saying we should just put the power back in the hands of people who apparently know better, i.e., professional curators. Obviously that kind of deep expertise is indispensable in its place, and it’s been undervalued in recent decades. (I was surprised, in fact, that he didn’t devote similar attention to anyone in his own field and mine, cultural criticism.) But it’s neither feasible nor desirable to reverse the participatory turn in culture from the internet as a whole.

Along with tech reporting, Chayka’s background is in the visual-arts world. And his high-culture biases come through here again and again. When he looks for contemporary digital alternatives, for example, the main sites he profiles are the art-film streamer the Criterion Channel and the classical-music hub Idagio. In ways that I also support, those venues offer more out-of-the-way choices and, best of all, more context for cultural works in the form of liner notes and commentary from artists and critics. But essentially that’s just imagining a better Spotify or Netflix. Much more difficult is dreaming up a better YouTube. User-created content has been the vital engine of twenty-first-century social-media culture. It’s also included hateful memes, doxing, mobbing, ahistoricism, appropriation, false accusations, and rambling sprawl. But none of those are fixed by turning it into anything at which Eustace Tilley would look approvingly down his monocle.

The possibility of a user uprising against “Filterworld” is more hindered than helped by caricatures of popular culture as an empty husk that’s suddenly had its life sucked out by algorithms. It’s true, for instance, that some of the stylistic transitions in Taylor Swift’s past few albums seem to cater to streaming-algorithm optimization. But Chayka just sounds ignorant when he says algorithms no longer leave any room for “storytelling” in pop music, a page after dismissing Swift’s Folklore and Evermore, two albums that were all about telling stories. Likewise, algorithms do partly explain why there’s a lot of monotonous, loop-based pop music out there these days, although it’s also an effect of technologies on the other side of the studio window, as in digital production techniques. But when Chayka chooses Billie Eilish as an example, someone who seemed precisely like an oddball, expressive relief from pop-chart tedium when she debuted, even the oldest of old fogeys might wonder what his problem is.

There are many other reasons the current cultural moment feels particularly washed-out, including the long-term effects of the coronavirus pandemic, which hobbled multiple cultural sectors for months and years, and the necessary slowdown brought by last year’s writers’ and actors’ strikes. Those conflicts reflected that venture capital is sputtering, threatening to reveal that the tech emperors stand naked. Peak TV has finished peaking, and gone are the days when ever-rising stock valuations seemed to enable Medici-like dispensations of cultural largesse. Older artists have had their livelihoods disrupted by digitization for more than two decades, while the following generations understandably seem collectively disheartened by the state of politics, economics, the environment, and their own mental health. Their phone feeds seem to offer more unsolvable terror than false comfort.

Now in his mid-30s, Chayka questions whether the nearly endless availability of cultural content to younger generations has made them value it less, compared to the effort it once took to discover what you might love. But a decade or more ago, I was wondering the same thing about people his age. Before that, perhaps older people thought the same about me. As media keep expanding, I suspect the truth is that young people are always creating meaning, not less than before, but differently.

As ever, with any vast structural problem, there is no individual solution. So as supplementary reading to Filterworld, my personal algorithms (the ones in my head) would recommend Eric Drott’s new Streaming Music, Streaming Capital, which focuses more squarely on the structural effects of platform capitalism, aka “noncommodity fetishism.” For smaller-scale inspiration, I would turn back to Glenn McDonald, who has his own book coming from Canbury Press later this year, called You Have Not Yet Heard Your Favourite Song: How Streaming Changes Music. Instead of simply recoiling from them, we need to think about how we can make these tools work for us rather than against us.

Frankly, as a high-information cultural consumer whose own tastes were well established before any social media came along, I rarely experience the most intense downsides of algorithms directly. I use likes and blocks and muting to ensure my social-media feeds are near-bucolic. I switch off autoplay. And I usually go to streaming services with my own agenda rather than following the platforms’ streaming suggestions. When the enshittification of Netflix started to feel out of control, I just canceled my subscription. (Like Chayka, I am enough of a snob to recommend substituting Criterion.) But I still love building playlists and sharing them on the platforms, the way I once traded mixtapes in high school.

I wonder if Chayka is experiencing the worst of the algorithms partly because he’s just now moving into middle age. That’s the point when most people all of a sudden “discover” that something has gone wrong with pop culture. It is when nostalgia comes to grab us by the neck. And Chayka also tends to share the aesthetic he ascribes to the algorithms: his previous book was about minimalism; he already favored ambient music; and he admits that he seeks out hipster cafés to work in whenever he travels. So if Chayka particularly feels the walls closing in on him, maybe it’s because the call is coming from inside the Generic Coffee Shop.

One of the most useful concepts Filterworld plucks out of academic discourse is that of “algorithmic anxiety.” It can range from just plain FOMO to the fear that your whole livelihood might hinge on appeasing the mysterious gods inside the black box, so that your messages find an audience. As Chayka writes, it can feel like facing an exam nobody really knows how to study for. Like him, I have gone along haplessly for years with the “folk theory” that Facebook prefers it when journalists post links to their articles not in the main body of the status update but down in the comments. (Or at least I did until Canadian Facebook started blocking news links.) Even if long stretches of Filterworld seem as much symptomatic of algorithmic anxiety as they are any cure for it, I appreciated the companionship in the affliction. And whether in books or in social media, there’s nothing wrong with a vigorous dispute with a thinker you respect. It’s more stimulating than rote, one might even say flat, affirmation.

Carl Wilson is the music critic for Slate and the author of Let’s Talk About Love: A Journey to the End of Taste (Bloomsbury). He’s based in Toronto.